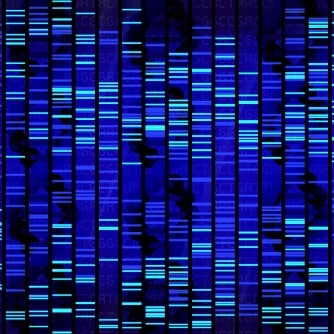

A group of New England Biolabs researchers has determined that sequenced DNA samples stored in public databases show much higher levels of low-frequency mutation errors than anticipated. The group’s paper, recently published in the journal Science, describes how they built an algorithm that calculates error rates across samples within a database. It also explains what the algorithm showed when put to use on a couple of public genome databases.

The Importance of Databases

Scientists who study the role of DNA in cell mutations that cause cancerous tumors rely on databases for their work. These databases must be highly accurate, as they hold critically important sequencing data. Researchers use this data to pinpoint commonalities between groups and isolate specific trends that have the potential to provide valuable insight. These studies compare the genomes of individuals who carry low-frequency mutations with those of the wider population. The findings are then used to create cancer data sets. The New England Biolabs team’s work is shifting the spotlight to the presumed accuracy of those cancer data sets. It is possible that this data is not as accurate as once assumed.

About the Algorithm

The researchers devised an algorithm to gauge the accuracy of any given data set. The algorithm tabulates the number of sequences displaying mutations that result from damage occurring during the sequencing process and compares that count with the number of sequences displaying naturally occurring mutations. The team used the algorithm to determine error rates for multiple public databases, including that of the 1000 Genomes Project and a portion of the TCGA database.
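The comparison described above can be illustrated with a minimal Python sketch. It is not the team’s published algorithm. It assumes paired-end sequencing, and it assumes that a genuine variant should appear about equally often in both reads of a pair while damage-induced changes tend to pile up in one of them. The function names, the input format (lists of mismatch labels such as "G>T") and the 1.5 cut-off are all hypothetical choices made for illustration.

```python
from collections import Counter


def mismatch_rates(mismatches, total_bases):
    """Per-base rate of each mismatch type, e.g. {"G>T": 0.03}."""
    if total_bases <= 0:
        raise ValueError("total_bases must be positive")
    counts = Counter(mismatches)
    return {kind: n / total_bases for kind, n in counts.items()}


def imbalance_scores(read1, read2, bases1, bases2, floor=1e-9):
    """Ratio of read-1 rate to read-2 rate for every mismatch type seen.

    A score near 1 suggests the change is equally common in both reads
    (assumed here to look like a natural variant); a score well above 1
    suggests an excess in read 1 (assumed here to look like damage).
    """
    rates1 = mismatch_rates(read1, bases1)
    rates2 = mismatch_rates(read2, bases2)
    kinds = set(rates1) | set(rates2)
    # `floor` avoids division by zero when a type is absent from one read set.
    return {
        kind: max(rates1.get(kind, 0.0), floor) / max(rates2.get(kind, 0.0), floor)
        for kind in kinds
    }


def flag_damage(scores, threshold=1.5):
    """Mismatch types whose imbalance exceeds a hypothetical threshold."""
    return sorted(kind for kind, score in scores.items() if score > threshold)


# Toy data: G>T is three times as frequent in read 1 as in read 2,
# while A>G is balanced between the two reads.
read1 = ["G>T"] * 30 + ["A>G"] * 10
read2 = ["G>T"] * 10 + ["A>G"] * 10
scores = imbalance_scores(read1, read2, bases1=1000, bases2=1000)
flagged = flag_damage(scores)
```

In this toy data set only the G>T change is flagged, because it is the only mismatch type whose rate differs sharply between the two reads.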

The Results

Error rates were much higher than assumed. The TCGA database had a whopping 73 percent error rate, while the 1000 Genomes Project had a 41 percent error rate. Unfortunately, the algorithm is incapable of identifying the cause of the unnatural damage. The team believes it stems from the techniques used during sample preparation, before the actual sequencing takes place. Additional algorithms have been created that allow sequencers to check their own work for mistakes, yet none had been put to use as of the date of this publication.

The New England Biolabs team notes that additional tools have been created that might help mitigate the DNA damage that occurs during sample preparation. The use of these tools could also improve the accuracy of public databases over time.